Both technologies are used for object detection in autonomous vehicles, but how do they compare?

Autonomous vehicles typically deploy multiple sensor systems for environmental perception: LiDAR, radar, and camera modules being the most popular. These sensor systems work in concert, providing a comprehensive representation of the outside world—vehicles, pedestrians, cyclists, signs, and more—and their overlapping capabilities also create redundancy, ensuring that if one system falters, another is there to fill the gap.

Radar is a component of most automotive sensor suites. A relatively low-cost, reliable, and time-tested technology, radar is capable of detecting larger objects at reasonable distances and performs well in low-light and bad-weather situations, both of which are strong advantages in automotive. However, because radar struggles to detect smaller objects and identify the objects it does detect, radar is only one component of the solution—it acts as an important complement to a primary sensing modality, such as LiDAR or cameras.

LiDAR technology provides distance measurements by measuring the time that a laser signal takes to bounce off of an object and return to a local sensor. Cameras—when used in pairs (e.g., stereo cameras)—also provide distance estimation, in this case based on the result of triangulation of visual information taken from two different points of view (i.e., the two cameras).

Stereovision and LiDAR each have the capabilities required to serve as the primary sensor system for vehicular autonomy—and, notably, can be deployed simultaneously to create redundancy. So, what are the pros and cons of these two sensing modalities?

A tale of two technologies.

Both stereo and LiDAR are capable of distance measurement, depth estimation, and dense point cloud generation (i.e., 3D environmental mapping). Both produce rich datasets that can be used not only to detect objects, but to identify them—at high speeds, in a variety of road conditions, and at long and short ranges. Both have advantages and disadvantages. Is there an obvious winner?

As mentioned previously, LiDAR uses a similar principle as sonar. It establishes distance between the vehicle and the environment by emitting laser pulses and measuring the time it takes for those signals to ricochet off of objects and return to a receiver. The greater the number of signals, the greater the number of possible distance measurements—more is undeniably better. Typically, this is accomplished by enabling multiple lasers that rotate (either physically or via logic) to scan the environment around the vehicle in a 360-degree field.

LiDAR has been an important sensing technology throughout the history of autonomy. Pioneering self-driving prototypes relied on LiDAR because of its precision distance measurements, reliability, and ease of use. Most competitors in the DARPA-sponsored autonomous challenges that began in 2004, for example, relied on LiDAR technologies.

The strengths of LiDAR are evident—so evident, in fact, that a majority of modern autonomous prototypes continue to deploy it as the primary sensing modality today. Its strengths include:

- High precision (distances measured to the centimeter)

- High data rate (mechanically rotating LiDARs provide 20 or more revolutions per second)

- Stable and reliable

- Proven

- Detection is not influenced by temperature or light

In spite of its many strengths, however, LiDAR does have certain technological limitations.

The weaknesses of LiDAR include:

- Adverse weather performance—false positives can be created by reflections caused by rain, fog, and dust. While these issues can be managed via dedicated algorithms, weather can still present issues for LiDAR-based systems.

- Eye safety regulations place a limit on LiDAR’s signal strength. This limitation introduces a mandatory trade-off between field of view (FOV), resolution, and distance.

- The effectiveness of LiDAR measurements is tied to the reflectivity of the object. If the emitted signal encounters a reflective object, most of its energy rebounds back to the receiver as intended, and the object is detected successfully. However, if the signal meets an obstacle with poor reflectivity—if a vehicle is black, for example—the signal’s energy may only return fractionally, and the reliability of detection can decrease as a result. Fortunately, most cars, motorcycles, bicycles, and pedestrians are considered sufficiently reflective for detection via LiDAR, which again, is why it has been deployed so widely in the field.

So, what about stereovision? How does it compare?

Stereovision refers to a technique that allows distances to be estimated via the processing of two separate images of the same environment, captured from two nearby points of view simultaneously. In the early years of vehicular autonomy (late 90s to early 2000s), the science of computer vision was in its infancy. This and other factors created a number of issues that prevented stereovision from immediate consideration as the primary sensing modality for self-driving.

Most notably, stereo suffered from:

- Poor long-distance imagery from low-resolution cameras

- Poor performance in low-light environments

- High computational resource requirements (multiple PCs were required onboard for computer vision processing)

- Cameras becoming un-calibrated during driving, requiring manual adjustment

At the time, these problems were significant enough to prevent stereovision from being deployed as a viable alternative for autonomous sensing. In the absence of competition, LiDAR flourished.

Since then, however, significant developments have occurred that make stereovision a more attractive candidate:

- Low-cost, high-resolution cameras (8-megapixel cameras are readily available today)

- Advanced ISPs with HDR and low-light image processing for night driving

- Embedded SoCs designed explicitly for real-time computer vision processing

- Automatic, on-the-fly camera calibration (more on this feature later)

These developments, taken as a whole, have transformed stereovision from a niche self-driving technology to a strong contender as the primary sensing modality for vehicular autonomy.

How does stereovision perform in today’s autonomous vehicles?

The most important performance index when considering an obstacle-recognition technology is resolution: how many distance measurements/estimations can it deliver per second? The higher the number, the more accurate the 3D representation of the world around the car. In general, stereovision can deliver approximately 2,000 vertical samples per second using today’s generation of cameras. When one compares this number to LiDAR’s 128 vertical samples per second, it becomes clear that stereovision is capable of delivering superior resolutions.

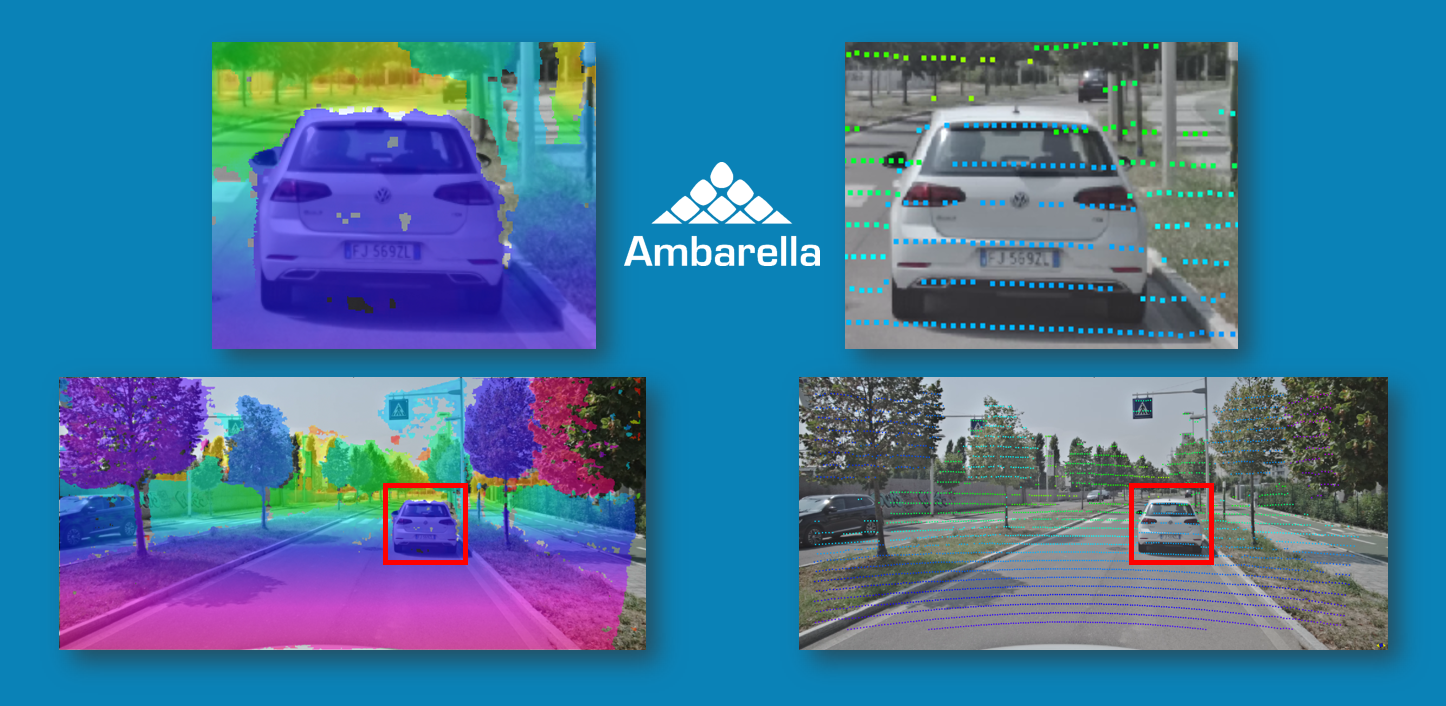

Looking at the comparison image shown above (in which colored pixels in both images represent the sensor’s measurements, and the color indicates the measured distance on each sensor’s scale), we can see the greater environmental coverage provided by the stereo solution on the left, while the LiDAR-based output on the right shows a much sparser overlay.

Examining the image more closely using the zoomed-in version below, we can see the differences more clearly: the data generated via stereovision is richer, which in turn, makes obstacle detection easier.

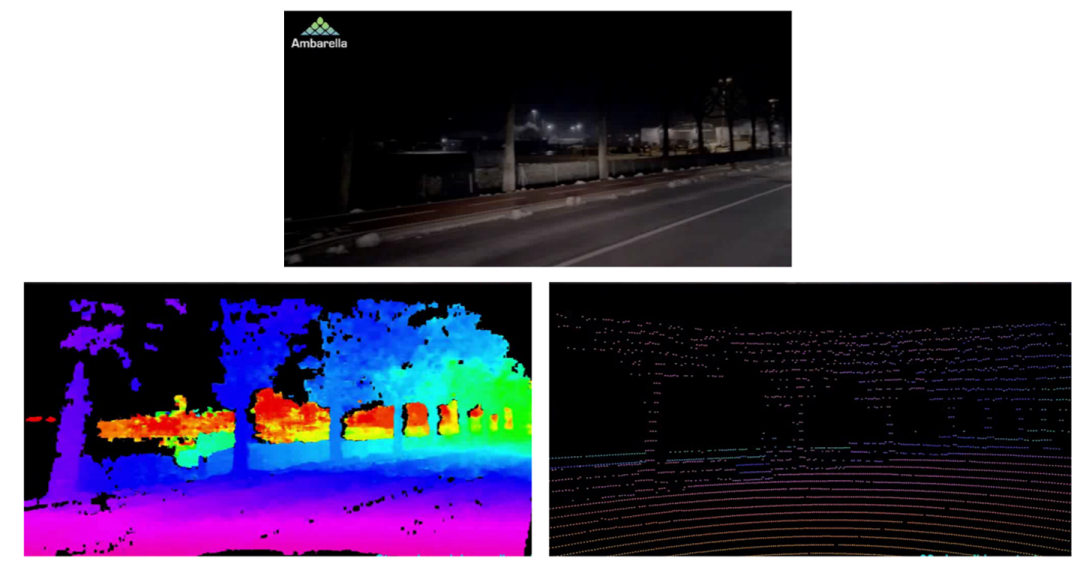

While the demonstration presented above occurred during the day, stereo resolution is also strong in low-light scenarios. The image below shows a similar density comparison during night driving.

Video link: https://youtu.be/QZM-5dIZSJk.

Accuracy, of course, is another important metric. Stereovision doesn’t provide direct distance measurements; rather, it provides distance estimations. With stereo, distance is a derived quantity, an indirect measurement obtained by processing two brightness images. Regardless, stereovision is more than capable of delivering the level of accuracy required for autonomous applications at both long ranges (where the precise measurement of distance isn’t mandatory) as well as short ranges (where high precision is required to perform precise maneuvers). At short range, for example, stereo can provide sensing at millimeter-level distances.

Besides resolution and accuracy, what are other strengths of modern stereo?

- Each of the two cameras in a stereo pair can double as independent monocular cameras, providing a built-in redundant system.

- The dual images provided by stereo cameras can be used to execute monocular CNN algorithms in parallel, such as object classification, all on a single chip.

- Stereovision provides the capability to detect generic 3D shapes, even those which haven’t been classified as known obstacles. Rocks, assorted debris, or random objects dropped from another vehicle (e.g., a ladder or mattress) will be detected by a stereo-based system. Even negative obstacles, such as holes, can be accurately detected.

- Stereo cameras are relatively inexpensive, an important consideration for high-volume applications. They also have no moving parts, can be auto-qualified, and consume minimal power.

- Stereovision can run at frame-rate speeds (30 fps for ultra-HD images). Because our stereo engine is hardwired onto our stereo-capable chips, Ambarella’s stereovision can generate extremely high data rates.

- Stereo camera auto-calibration. For stereovision to function, the positions of the two cameras must remain fixed relative to each other; otherwise, the measured data will be incorrect. Typical driving conditions, where vibrations and shocks are the norm, can be challenging for stereo-based systems. Recognizing this, Ambarella has developed a real-time auto-calibration procedure that can compensate for small camera movements that typically occur during normal vehicle operations. This ensures that our stereovision processing remains precise.

For information about our stereo-capable SoCs (CV2FS and CV2AQ are our current stereovision-enabled parts), please click here.