Autonomy starts with how the vehicle perceives a three-dimensional world.

An essential task in autonomous driving is environmental perception—interpreting an ever-changing, three-dimensional world on the fly.

Which methods will the vehicle use to understand and respond to its surroundings? How will the system sense depth, especially while in motion? And what if the objects in its path are completely novel and unfamiliar? In other words, what if the system hasn’t been trained to recognize a particular obstacle in its path?

The traditional approach to autonomy has been to combine depth-sensing technologies, most commonly LiDAR and radar, with GPS and extremely accurate maps of the terrain. Depth estimation from monocular (single-camera) data has also become popular in the industry; however, as the name implies, this technique provides an estimate of distance rather than a precise measurement. Stereo cameras, on the other hand, can deliver precise distance measurements and offer significant advantages for autonomous applications.

Why is stereovision important to autonomy?

Stereovision, the generation of 3D depth maps from two synchronized, auto-calibrated cameras, is an important component of visual perception, motion prediction, and path planning in autonomous systems. LiDAR, another commonly used technology for measuring distance, is also capable of accurate 3D object detection, and monocular cameras can be used to infer depth-related information. Stereovision, however, has unique advantages in delivering a highly detailed and accurate 360-degree understanding of the 3D environment.
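For a rectified stereo pair, depth comes from triangulation: a point that appears d pixels apart horizontally in the left and right images lies at depth Z = f·B/d, where f is the focal length in pixels and B is the baseline between the two cameras. The sketch below assumes an ideal pinhole model and rectified images; the focal length and baseline are hypothetical values, not the parameters of any Ambarella module.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Return depth in meters for a rectified stereo pair using Z = f * B / d.

    Assumes an ideal pinhole camera and rectified images, so matching points
    differ only in their horizontal pixel coordinate.
    """
    if disparity_px <= 0:
        # Zero disparity means a point at infinity; negative disparity is invalid.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 1000 px focal length, 0.3 m baseline.
FOCAL_PX = 1000.0
BASELINE_M = 0.3

for d in (60.0, 30.0, 15.0):
    z = depth_from_disparity(d, FOCAL_PX, BASELINE_M)
    print(f"disparity {d:4.1f} px -> depth {z:.1f} m")
```

With these assumed values, halving the disparity doubles the depth, so a 30-pixel disparity corresponds to 10 m and a 15-pixel disparity to 20 m.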

Let’s look at a brief example. The video below shows a simple scene: two people passing a soccer ball back and forth. Note how the stereo camera can effectively perceive small details in the environment, including the limbs of both players as well as the dynamic movements of the ball itself. Consider the size of these objects: a soccer ball is approximately nine inches (22 cm) in diameter, while the average human ankle has a diameter of only four inches (10 cm). Also note the distance of the camera from the players—approximately thirty feet (10 m).

While the scene depicted in the clip above isn’t as challenging as typical roadway scenes—where lighting conditions can vary dramatically, and vehicles, pedestrians, bicycles, debris, and other obstacles are the norm—our stereovision solutions are designed to perform effectively in complex environments as well.
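To put the soccer example in perspective, a pinhole camera images an object of size S at distance Z across roughly f·S/Z pixels, where f is the focal length in pixels. The sketch below applies this to the ball and ankle sizes quoted above at 10 m. The 1000-pixel focal length is a hypothetical value; the optics of the camera used in the video are not specified.

```python
def pixel_extent(object_size_m: float, distance_m: float, focal_px: float) -> float:
    """Approximate image extent in pixels of an object (pinhole model: f * S / Z)."""
    return focal_px * object_size_m / distance_m


FOCAL_PX = 1000.0   # hypothetical focal length in pixels
DISTANCE_M = 10.0   # camera-to-player distance from the example

for name, size_m in (("soccer ball", 0.22), ("ankle", 0.10)):
    extent = pixel_extent(size_m, DISTANCE_M, FOCAL_PX)
    print(f"{name}: about {extent:.0f} px across")
```

Under that assumption the ankle spans only about 10 pixels, which leaves the stereo matcher a very small patch of texture from which to measure disparity.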

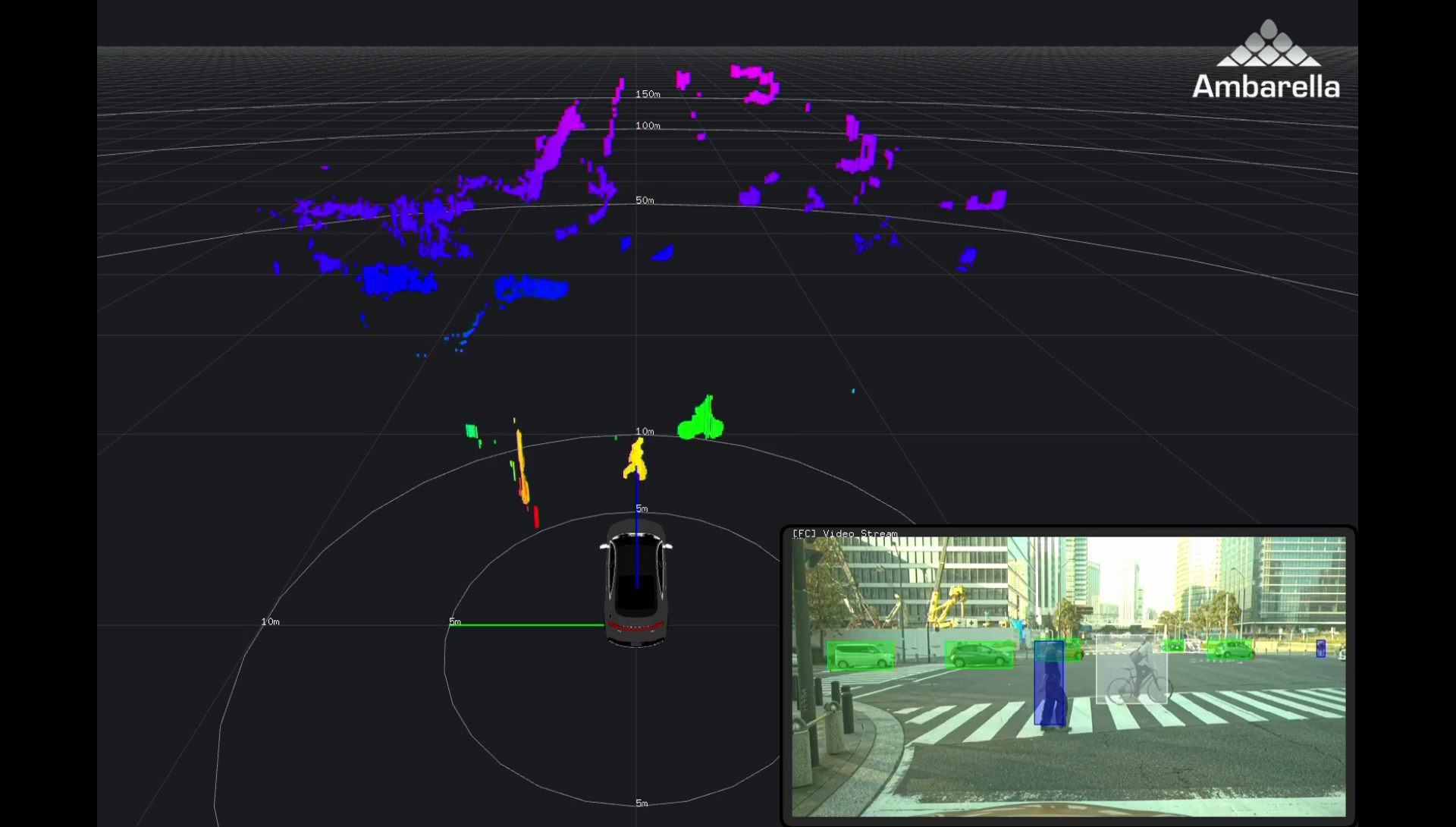

Looking at the screenshot below, captured with a stereo module on one of Ambarella’s autonomous EVA vehicles, we can see an urban intersection complete with vehicles, pedestrians, a bicycle, and obstacles such as bollards and signposts near the curb.

Comparing the larger stereovision view with the inset image in the lower-right corner, we can clearly distinguish the signpost on the left, the pedestrian in the crosswalk, the nearby cyclist, the two closest vehicles entering the intersection from the left-hand side, and background elements on either side of the road. The colors in the stereovision view represent distance, with warmer colors (e.g., orange) indicating objects closer to the vehicle and cooler colors (e.g., purple) indicating objects farther away. By contrast, the colors of the 3D bounding boxes in the inset view indicate object type: vehicles are outlined in green, pedestrians in blue, and bicycles in white.
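The screenshot uses two independent color encodings: one maps distance to a hue, the other maps object class to an outline color. The sketch below illustrates both with Python’s standard `colorsys` module. The hue endpoints, the near/far display limits, and the RGB values are assumptions chosen to match the description, not the exact palette used in the screenshot.

```python
import colorsys
from typing import Dict, Tuple

RGB = Tuple[int, int, int]

# Hypothetical outline colors matching the description of the inset view.
BOX_COLORS: Dict[str, RGB] = {
    "vehicle": (0, 255, 0),        # green
    "pedestrian": (0, 0, 255),     # blue
    "bicycle": (255, 255, 255),    # white
}


def depth_to_rgb(depth_m: float, near_m: float = 2.0, far_m: float = 50.0) -> RGB:
    """Map a depth to RGB: warm (orange) for near points, cool (purple) for far ones.

    near_m and far_m are hypothetical display limits; depths outside them are clamped.
    """
    clamped = min(max(depth_m, near_m), far_m)
    t = (clamped - near_m) / (far_m - near_m)   # 0.0 = nearest, 1.0 = farthest
    hue_deg = 30.0 + t * (280.0 - 30.0)         # 30 deg is orange, 280 deg is purple
    r, g, b = colorsys.hsv_to_rgb(hue_deg / 360.0, 1.0, 1.0)
    return (round(r * 255), round(g * 255), round(b * 255))


print(depth_to_rgb(3.0), depth_to_rgb(40.0), BOX_COLORS["pedestrian"])
```

Keeping the two mappings separate mirrors the screenshot: the depth view answers "how far?", while the bounding-box view answers "what is it?".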

Stereo performance in challenging light conditions.

For a stereo-based solution to be effective on the road, it must maintain accuracy across a wide range of lighting conditions. Take nighttime driving as an example. Stereo-based obstacle detection in darkness, covering both positive obstacles (objects rising above the road surface) and negative obstacles such as potholes, requires robust depth estimation that includes a disparity validity measure. The video below demonstrates Ambarella’s stereovision processing capabilities in a very low-light scenario on the road.

In the video above, the lower-left quadrant shows a stereo-based dense disparity view. As in the earlier screenshot, the colors represent distance, but the color scheme is different: violet indicates nearby objects and red indicates distant ones, with the remaining colors covering the ranges in between.
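The post does not say which disparity validity measure Ambarella uses. One widely used option is a left-right consistency check: disparity is computed once with the left image as reference and once with the right, and a pixel is kept only if both estimates agree. The sketch below assumes dense disparity maps stored as nested lists; the 1-pixel tolerance is an arbitrary choice.

```python
from typing import List, Optional


def left_right_check(disp_left: List[List[float]],
                     disp_right: List[List[float]],
                     max_diff: float = 1.0) -> List[List[Optional[float]]]:
    """Keep a left-reference disparity only if the right-reference map agrees with it.

    A pixel at column x with disparity d in the left image should match column
    x - d in the right image, whose own disparity should also be close to d.
    """
    height, width = len(disp_left), len(disp_left[0])
    checked: List[List[Optional[float]]] = []
    for y in range(height):
        row: List[Optional[float]] = []
        for x in range(width):
            d = disp_left[y][x]
            xr = x - round(d)  # corresponding column in the right image
            if d > 0 and 0 <= xr < width and abs(d - disp_right[y][xr]) <= max_diff:
                row.append(d)
            else:
                # Invalid: zero disparity, match outside the image, or the
                # two views disagree (e.g., occlusion or a low-texture region).
                row.append(None)
        checked.append(row)
    return checked
```

For example, `left_right_check([[0.0, 2.0, 2.0, 2.0]], [[2.0, 5.0, 2.0, 2.0]])` keeps only the third pixel, because the last pixel’s match in the right image reports a disparity of 5 instead of 2.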

Combining the outputs of multiple short- and long-range stereo camera modules makes it possible to generate dense point clouds that visualize the environment in striking detail, as shown in the video below.
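A dense point cloud can be built by back-projecting every valid disparity into 3D with the pinhole model (Z = f·B/d, X = (u − cx)·Z/f, Y = (v − cy)·Z/f), then transforming each module’s points into a shared frame before concatenating them. The post does not describe how Ambarella fuses its modules; this sketch assumes known intrinsics (`focal_px`, `cx`, `cy`) and a hypothetical extrinsic model of one yaw rotation plus a translation per module.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def disparity_to_points(disparity: Sequence[Sequence[Optional[float]]],
                        focal_px: float, baseline_m: float,
                        cx: float, cy: float) -> List[Point]:
    """Back-project valid disparities to 3D points in the camera frame.

    Assumed camera frame convention: X right, Y down, Z forward.
    """
    points: List[Point] = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d is None or d <= 0:
                continue  # skip invalid or infinitely distant pixels
            z = focal_px * baseline_m / d
            points.append(((u - cx) * z / focal_px, (v - cy) * z / focal_px, z))
    return points


def to_common_frame(points: List[Point], yaw_rad: float, translation: Point) -> List[Point]:
    """Rotate points about the vertical (Y) axis, then translate them into a shared frame."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return [(c * x + s * z + tx, y + ty, -s * x + c * z + tz) for x, y, z in points]


# Hypothetical rig: one forward-facing and one rear-facing module, 2 m apart.
front = disparity_to_points([[None, 10.0], [0.0, 20.0]], 100.0, 0.5, 0.0, 0.0)
rear = disparity_to_points([[10.0]], 100.0, 0.5, 0.0, 0.0)
cloud = (to_common_frame(front, 0.0, (0.0, 0.0, 1.0))
         + to_common_frame(rear, math.pi, (0.0, 0.0, -1.0)))
print(len(cloud), "points")
```

A production system would use full 3D rotations from calibration rather than a single yaw angle; the yaw-only model just keeps the example short.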

How does Ambarella use data generated by stereo cameras on our vehicles?

- Depth mapping: A depth map allows generic objects in the scene, from vehicles and pedestrians to poles, trash bins, potholes, and debris, to be detected along with their accurate size, location, and distance, without the system having to be explicitly trained to recognize them.

- Road modeling: Different road shapes can be modeled accurately, which aids downhill and uphill maneuvers.

- Data fusion: Because the same sensor provides color information alongside depth data, monocular algorithms (e.g., CNN-based lane marking or traffic sign detection) can run simultaneously, and their output can then be fused with the depth map.

- 360-degree visualization: Stereo cameras fitted with fisheye lenses can be used for short-range perception, enabling a 360-degree view of the scene during low-speed maneuvers.
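The depth-mapping, road-modeling, and data-fusion ideas above can be illustrated with a few small functions. This is a minimal sketch under stated assumptions, not Ambarella’s pipeline: road samples are hypothetical (distance, height) pairs already known to lie on the road; the road profile is a straight line fitted by ordinary least squares (real systems usually need robust fitting, because obstacle points contaminate the fit); the 0.15 m obstacle threshold is arbitrary; and detection boxes use a hypothetical (x0, y0, x1, y1) pixel format.

```python
import statistics
from typing import List, Optional, Sequence, Tuple

Profile = Tuple[float, float]  # (a, b) in height = a + b * distance


def fit_road_profile(samples: List[Tuple[float, float]]) -> Profile:
    """Least-squares line through (distance, height) road samples.

    The slope b captures the grade: b > 0 is uphill, b < 0 is downhill.
    """
    n = len(samples)
    mean_z = sum(z for z, _ in samples) / n
    mean_h = sum(h for _, h in samples) / n
    cov = sum((z - mean_z) * (h - mean_h) for z, h in samples)
    var = sum((z - mean_z) ** 2 for z, _ in samples)
    b = cov / var
    return mean_h - b * mean_z, b


def classify_point(z: float, h: float, profile: Profile, threshold_m: float = 0.15) -> str:
    """Label a 3D point by its height relative to the road model; no object classes needed."""
    a, b = profile
    residual = h - (a + b * z)
    if residual > threshold_m:
        return "positive obstacle"   # rises above the road (e.g., debris, a pole)
    if residual < -threshold_m:
        return "negative obstacle"   # drops below the road (e.g., a pothole)
    return "road"


def box_distance(depth_map: Sequence[Sequence[Optional[float]]],
                 box: Tuple[int, int, int, int]) -> Optional[float]:
    """Fuse a 2D CNN detection with depth: median valid depth inside the box (end-exclusive)."""
    x0, y0, x1, y1 = box
    depths = [depth_map[y][x] for y in range(y0, y1) for x in range(x0, x1)
              if depth_map[y][x] is not None]
    return statistics.median(depths) if depths else None


profile = fit_road_profile([(5.0, 0.25), (15.0, 0.75), (25.0, 1.25)])  # hypothetical 5% uphill grade
print(classify_point(20.0, 1.4, profile))
print(box_distance([[10.0, 10.2, None], [9.8, 30.0, 10.1]], (0, 0, 2, 2)))
```

Because the fitted line follows the road’s slope, a point on an uphill grade is not mistaken for an obstacle merely for being higher than the vehicle. Using the median inside a box keeps a single background pixel (the 30 m value above) from skewing the fused distance.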


For additional information, please contact us.